360 Product Photography from Video

3D models are an awesome tool for retailers to help customers get a better sense of what they're buying. However, they can be pricey and take a lot of time to create. Traditional 360 product photography likewise requires professional equipment and expertise. With this method, anyone can take a video captured on a modern iPhone and generate a result that can be viewed like 360 product photos.

About the project

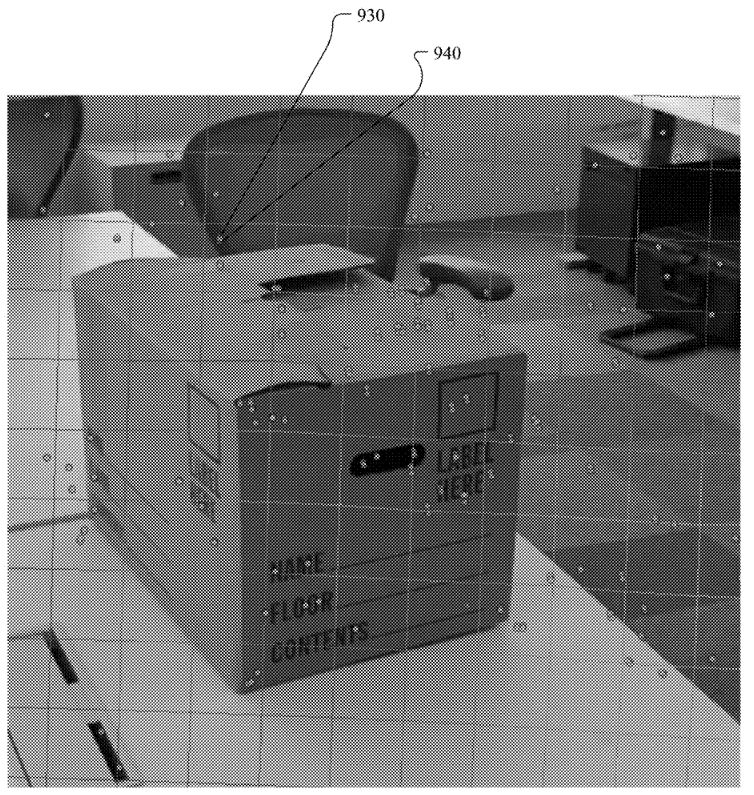

A small group of us at Computational Photography developed a feature for Facebook Marketplace called Rotating View. Anyone with a relatively recent iPhone capable of running ARKit could capture a short video while moving around the item they were trying to sell, and we would generate a 360 product photo from it to attach to their product listing.

Capturing a 360 product photo in FB Marketplace

How it Works

The method to create the 360 product photo is explained in depth in our patent, Outputting warped images from captured video data (US20210366075A1). The overall goal is to create a video where each frame shows a different angle of the subject, and the subject doesn't move from the center of the screen. There are two major challenges. First, the original video's timing and pacing are off, since people don't move around the subject at a constant speed. Second, the videos tend to be really shaky, or the subject drifts around the frame. Most video stabilization algorithms aim to stabilize the background, whereas in this case the video needs to be stabilized around the subject. There are three major steps to our approach: reconstructing camera poses in 3D space, finding a smooth camera path, then selecting frames and warping them to align and fit together.

Raw video capture

Reconstructing camera poses. The first step is to estimate, for each frame in the input video, where in 3D space the frame was taken. There are many ways to approach this problem, but one stands out in particular. Simultaneous localization and mapping (SLAM) refers to constructing a representation of the environment while keeping track of where we are inside it, typically by combining computer vision with sensor data (sensor fusion). It's used in applications ranging from robot navigation and scene understanding to augmented and virtual reality. The iPhone's ARKit has a pretty good built-in SLAM algorithm that can be used on iOS. On desktop, there is a purely computer-vision-based algorithm known as ORB-SLAM2.
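
Whichever SLAM system is used, what the later steps need from each frame is a camera position. As a minimal sketch, assuming the pose is given in the common world-to-camera form (x_cam = R · x_world + t), the position can be recovered as C = -Rᵀt. The function name and the example pose below are hypothetical, and pose conventions differ between systems: if the pose is camera-to-world instead, the position is simply the translation part.

```python
def camera_center(rotation, translation):
    """Return the camera position in world coordinates.

    Assumes a world-to-camera pose, x_cam = R @ x_world + t,
    so the camera center is C = -R^T @ t.
    """
    return tuple(
        -sum(rotation[row][axis] * translation[row] for row in range(3))
        for axis in range(3)
    )


# Hypothetical pose: camera rotated 90 degrees about the z axis.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
t = [1.0, 0.0, 0.0]

position = camera_center(R, t)
```

A quick sanity check is that plugging the returned position back into R · C + t gives the zero vector, since the camera sits at the origin of its own coordinate frame.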

Demo of the ORB-SLAM2 algorithm (source)

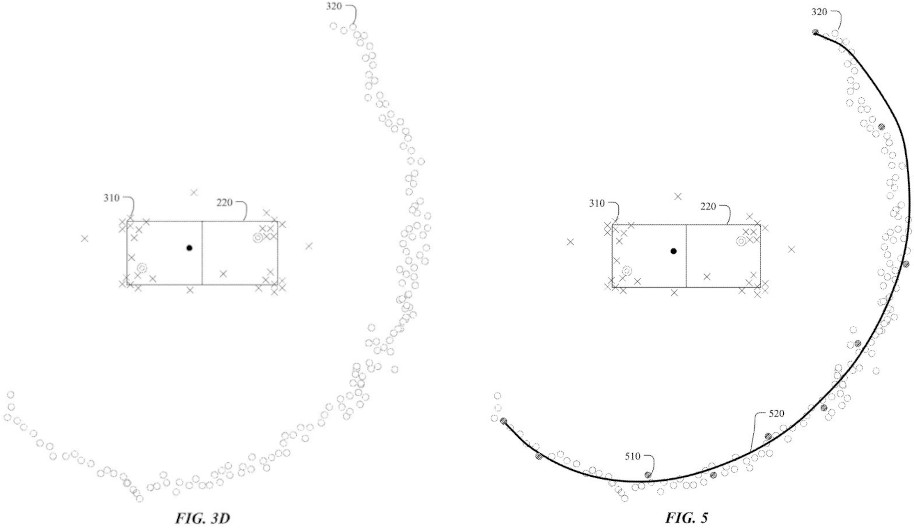

Finding a smooth virtual camera path. Once the camera positions are estimated, some post-processing is needed to remove outliers, handle doubling back, and fill gaps in the data before fitting a smooth arc-shaped curve through the positions to stabilize the video. Points spaced out along this curve are then picked as the desired viewpoints, and for each one the closest original camera position is found.
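
To make the curve-fitting and frame-selection idea concrete, here is a rough sketch under several assumptions that do not come from the patent. The camera positions are already cleaned up and projected onto a 2D ground plane, the "arc-shaped curve" is approximated by a least-squares circle, the viewpoints are evenly spaced in angle, and the arc does not cross the ±180° wraparound. The names `fit_circle` and `select_frames` are hypothetical.

```python
import math


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points):
    """Algebraic least-squares circle fit; returns (cx, cy, radius).

    Fits x^2 + y^2 + D*x + E*y + F = 0 by solving the 3x3 normal equations.
    """
    n = len(points)
    sx = sum(x for x, _ in points)
    sy = sum(y for _, y in points)
    sxx = sum(x * x for x, _ in points)
    syy = sum(y * y for _, y in points)
    sxy = sum(x * y for x, y in points)
    sxz = sum(x * (x * x + y * y) for x, y in points)
    syz = sum(y * (x * x + y * y) for x, y in points)
    a = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    b = [-sxz, -syz, -(sxx + syy)]
    det = _det3(a)
    solution = []
    for col in range(3):  # Cramer's rule, one unknown at a time
        m = [row[:] for row in a]
        for row in range(3):
            m[row][col] = b[row]
        solution.append(_det3(m) / det)
    d, e, f = solution
    cx, cy = -d / 2, -e / 2
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)


def select_frames(points, num_views):
    """Pick the frame index nearest to each evenly spaced point on the arc.

    Requires num_views >= 2 and an arc that stays within (-pi, pi].
    """
    cx, cy, r = fit_circle(points)
    angles = [math.atan2(y - cy, x - cx) for x, y in points]
    lo, hi = min(angles), max(angles)
    picks = []
    for i in range(num_views):
        theta = lo + (hi - lo) * i / (num_views - 1)
        tx, ty = cx + r * math.cos(theta), cy + r * math.sin(theta)
        picks.append(min(
            range(len(points)),
            key=lambda j: math.hypot(points[j][0] - tx, points[j][1] - ty),
        ))
    return picks


# Hypothetical capture: the person lingers near 0, 30 and 90 degrees.
degrees = [0, 2, 5, 30, 31, 33, 60, 85, 88, 90]
camera_xy = [(2 * math.cos(math.radians(d)), 2 * math.sin(math.radians(d)))
             for d in degrees]
picks = select_frames(camera_xy, 4)
```

Because the targets are spaced by angle rather than by time, frames where the person lingered are skipped and the output pacing evens out. A real pipeline would still need the outlier, doubling-back, and gap handling described above.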

Figures showing virtual camera path

Generating output frames. Lastly, given the original camera position for each point, the desired viewpoint, and the point cloud data from the first step, the images can be warped so that they look like they were taken from the ideal viewpoints. One method of doing this is known as grid-warp or mesh-warp.
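
The core of a grid-warp can be sketched as follows, as an illustration rather than the implementation used in the feature. The image is covered by a regular grid, each grid vertex is moved to a new position, and every point inside a cell follows its four corners by bilinear interpolation. How the vertex positions are derived from the point cloud and the two viewpoints is not covered here, and the name `mesh_warp_point` and the example grid are hypothetical.

```python
def mesh_warp_point(x, y, warped_vertices, cell_size):
    """Map a source point (x, y) through a warped regular grid.

    warped_vertices[row][col] is the new (x, y) position of the vertex
    that started at (col * cell_size, row * cell_size).
    """
    rows = len(warped_vertices) - 1      # number of cell rows
    cols = len(warped_vertices[0]) - 1   # number of cell columns
    # Find the cell containing the point, clamping at the far edges.
    col = min(int(x // cell_size), cols - 1)
    row = min(int(y // cell_size), rows - 1)
    # Fractional position inside the cell, each in [0, 1].
    u = x / cell_size - col
    v = y / cell_size - row
    p00 = warped_vertices[row][col]
    p10 = warped_vertices[row][col + 1]
    p01 = warped_vertices[row + 1][col]
    p11 = warped_vertices[row + 1][col + 1]
    # Bilinear blend of the four warped corners.
    weights = ((1 - u) * (1 - v), u * (1 - v), (1 - u) * v, u * v)
    corners = (p00, p10, p01, p11)
    wx = sum(w * p[0] for w, p in zip(weights, corners))
    wy = sum(w * p[1] for w, p in zip(weights, corners))
    return wx, wy


# Hypothetical 2x2-cell grid (3x3 vertices) with 10-pixel cells.
grid = [[(c * 10.0, r * 10.0) for c in range(3)] for r in range(3)]
grid[1][1] = (14.0, 10.0)  # drag the middle vertex 4 pixels to the right

moved = mesh_warp_point(5.0, 5.0, grid, 10.0)
```

The center of the top-left cell moves by a quarter of the vertex displacement, since only one of its four corners changed. To render a full image, implementations typically either draw the warped mesh as textured triangles or invert the mapping and sample the source image per output pixel.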

Figures showing image grid-warp

Examples

Raw video capture

Raw video capture

